Equilibrium

Most of us struggle to accept one thing about existence, namely entropy, which, translated into human terms, says that if you let inertia take over, everything will end up in a state of lifeless disorder.

Two paths lead to that end state, which we might see as a form of equilibrium: centralization/standardization and randomness/individualism. In the former, everyone does the same thing, Soviet-style; in the latter, people act on their own whims and notions, anarchy-style.

Equilibrium makes sense when you are mixing components. Over time, the two merge and become a uniform new thing. However, much as equality makes sense in arithmetic but not in politics, the concept does not carry over well to populations or activities.

Great empires tend to die of equilibrium. They both standardize behavior in order to eliminate methods that cause instability, much as we ban murder or heroin use, and allow citizens to do whatever they want otherwise, which means unity fragments into many special interests and chaotic individual behaviors.

If you look at your average hipster colony (Williamsburg, NY or Austin, TX), you see a great diversity of surface behaviors. Hipsters collect vintage coffee grinders, paint only in purple, or ride unique vehicles. Yet they all still work in coffee shops, collect the dole, and smoke low-quality weed.

In contrast to this tendency, we could look at the ecosystem model for something more essential. In the ecosystem model, the system aims for balance over time instead of uniformity. It does not act in unison, but in inequality and back-and-forth.

This conflict plays out in ecosystems within nature and our understanding of them:

In the mid-1990s, a young biologist named Suzanne Simard, now at the University of British Columbia, decided to test this concept in a forest. “Some people thought I was crazy,” Simard (who did not respond to multiple interview requests) said in a 2016 TED talk. For her doctoral project at Oregon State University, she took carbon dioxide with radioactive carbon isotopes and injected it into bags installed around pint-size birch trees growing near Douglas fir seedlings. After a little while, she ran a Geiger counter along the Douglas fir trees, and the device beeped like crazy. Moreover, she found that the radioactive carbon could also flow from the Douglas firs to the birches if she planted the bags near the firs. She had discovered that the trees shared carbon via underground networks. Her findings, published in Nature in 1997, lit a fire under scientists and the public alike.

In describing what she found, Simard has emphasized the cooperation she views as inherent in nature. “A forest is a cooperative system, and if it were all about competition, then it would be a much simpler place,” she said to Yale Environment 360 in 2016. “Why would a forest be so diverse? Why would it be so dynamic?” In her TED talk, Simard referred to forests as “supercooperators” and made the bold assertion that trees don’t just cooperate but communicate. She described birches and Douglas firs as engaging in a “lively two-way conversation” mediated by their underground collaborators. “I had found solid evidence of this massive belowground communications network,” she said, adding later in the talk, “Through back and forth conversations, they increase the resilience of the whole community.”

But to Kiers, the benevolent, cooperation-focused view promoted by thinkers like Margulis and Simard was suspect. Kiers saw instead a world ordered by individual interests, where potential cheaters lurked everywhere and species needed complex strategies to keep their trading partners in line.

“I had this realization … that I’m less interested in cooperation and I’m actually much more interested in the tension,” Kiers said. “I think there’s an underappreciation of how tension drives innovation. Cooperation to me suggests a stasis.”

As she soon learned, the legume-bacteria interaction is not so simple. A single legume plant can host 10 or more strains of bacteria. To Kiers, this evoked a concept from the ecologist Garrett Hardin, whose famous 1968 essay in Science, “The Tragedy of the Commons,” argued that individuals pursuing their own interests can destroy a common environment or resource. The legume plant itself could be seen as a commons, and any given bacterial strain could hoard nitrogen while continuing to feast on the plant’s sugars.

Kiers surrounded some nodules on soybean plants with an almost nitrogen-free air supply, making the bacteria in those nodules useless to the plant. She found that the plant reacted by shutting off the supply of oxygen to those bacteria, drastically reducing their reproduction. It seemed the relationship between the bacteria and the soybeans, far from being a happy friendship, was an uneasy détente, with the plant imposing crippling sanctions on any bacterial partners that failed to earn their keep. The paper, which was published in Nature before Kiers even received her doctorate, made a huge splash.

The tragedy of the commons shows us the chaotic view: everyone does only what benefits themselves, and this wears down any shared resources. Over time, a situation like heat death emerges, where everyone has as much as they can get and nothing transfers, so everyone stays in that situation.

This resembles both the subsistence-living economies of the third world and the moribund inbred socialist economy of the late Soviet Union, where stagnation overwhelmed not just growth but also maintenance of the system, leading to a gradual loss of energy and productivity.

Ecosystems show us balance through the give and take over time. This keeps everything finely tuned, and eliminates both the problem of standardization, or everyone doing exactly the same thing, and the downsides of individualism, which leads to a tragedy of the commons.

That enables a system to become perpetual, balancing itself through constant adjustment by independent actors operating by the simplest possible agenda, self-interest. Unlike the categorical thinking of humans, it consists of many localized and particular acts.

In the end, we are adjusting not just to biology, but to the underlying thermodynamics:

[T]he laws of thermodynamics make possible the characterization of a given sample of matter — after it has settled down to equilibrium with all parts at the same temperature—by ascribing numerical measures to a small number of properties (pressure, volume, energy, and so forth). One of these is entropy. As the temperature of the body is raised by adding heat, its entropy as well as its energy is increased.

On the other hand, when a volume of gas enclosed in an insulated cylinder is compressed by pushing on the piston, the energy in the gas increases while the entropy stays the same or, usually, increases a little. In atomic terms, the total energy is the sum of all the kinetic and potential energies of the atoms, and the entropy, it is commonly asserted, is a measure of the disorderly state of the constituent atoms. The heating of a crystalline solid until it melts and then vaporizes is a progress from a well-ordered, low-entropy state to a disordered, high-entropy state.

The principal deduction from the second law of thermodynamics (or, as some prefer, the actual statement of the law) is that, when an isolated system makes a transition from one state to another, its entropy can never decrease. If a beaker of water with a lump of sodium on a shelf above it is sealed in a thermally insulated container and the sodium is then shaken off the shelf, the system, after a period of great agitation, subsides to a new state in which the beaker contains hot sodium hydroxide solution. The entropy of the resulting state is higher than the initial state, as can be demonstrated quantitatively by suitable measurements.

It is possible, however, that in the course of time the universe will suffer “heat death,” having attained a condition of maximum entropy, after which tiny fluctuations are all that will happen.

Consider then that the same can apply to information, meaning that it is a property of order itself, as manifested in information entropy:

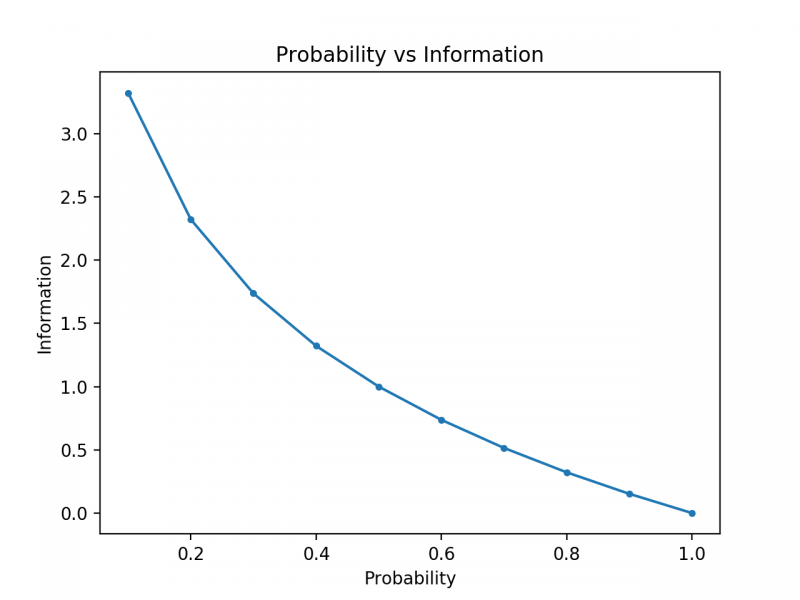

We can calculate the amount of information there is in an event using the probability of the event. This is called “Shannon information,” “self-information,” or simply the “information.”

Consider a flip of a single fair coin. The probability of heads (and tails) is 0.5…

To make this clear, we can calculate the information for probabilities between 0 and 1 and plot the corresponding information for each. We can then create a plot of probability vs information. We would expect the plot to curve downward from low probabilities with high information to high probabilities with low information.

We can see the expected relationship where low probability events are more surprising and carry more information, and the complement of high probability events carry less information.
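The calculation described above can be sketched in a few lines of Python. This is a minimal illustration that assumes information is measured in bits (base-2 logarithm); the function name `self_information` and the particular probabilities chosen are ours, not part of the quoted source.

```python
import math


def self_information(p: float) -> float:
    """Return the Shannon information, in bits, of an event with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be greater than 0 and at most 1")
    # log2(1/p) is the same quantity as -log2(p).
    return math.log2(1.0 / p)


# A fair coin flip: heads and tails each have probability 0.5.
coin_bits = self_information(0.5)

# Illustrative probabilities (an assumption), ordered from rare to certain.
probabilities = [0.1, 0.25, 0.5, 0.75, 1.0]
table = [(p, self_information(p)) for p in probabilities]

if __name__ == "__main__":
    print(f"fair coin flip: {coin_bits:.3f} bits")
    for p, bits in table:
        print(f"p = {p:.2f} -> {bits:.3f} bits")
```

A fair coin flip comes out at exactly one bit, and the information falls steadily as the probability rises, reaching zero for an event that is certain to happen.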

In other words, repetition conveys less information. This applies both to centralization, in which everything is repeated in unison, and to chaotic individualism, in which events differ on the surface but, in order to support that difference, converge on conformity.

The more the hipsters in Austin attempt to be unique, the more they become “uniformly unique” and therefore, the means by which they are attempting to be unique convey less information. This is why we can recognize them as a genre despite their many different quirks and personality accents.
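The narrow claim that repetition lowers information can be illustrated with Shannon entropy, the average information per event. The sketch below is only that: the three distributions are hypothetical numbers invented for illustration, not measurements of any population, and the names `unison`, `mostly_same`, and `varied` are ours.

```python
import math


def shannon_entropy(probs):
    """Return the average information, in bits, of a discrete distribution."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with zero probability contribute nothing to the sum.
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)


# Hypothetical distributions over four possible behaviors (assumed values).
unison = [1.0, 0.0, 0.0, 0.0]            # everyone repeats one behavior
mostly_same = [0.85, 0.05, 0.05, 0.05]   # small quirks around one dominant pattern
varied = [0.25, 0.25, 0.25, 0.25]        # behaviors spread evenly

if __name__ == "__main__":
    for name, dist in [("unison", unison),
                       ("mostly_same", mostly_same),
                       ("varied", varied)]:
        print(f"{name}: {shannon_entropy(dist):.3f} bits")
```

Under these assumed numbers, pure repetition carries zero bits per event, and a population with small quirks around one dominant pattern carries well under half the information of an evenly spread one.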

If you want proof that the universe is alive, consider the paradox of information entropy. Without it, the universe would converge on repetition; with it, the universe is able to exist in a static state of perpetuation, despite having constant internal change.

Even more, the value of unique patterns in dispelling entropy (actually unique patterns, not conformist non-conformist hipsters) suggests that, as Plato argued, there may be a dimension in which information itself exists as a cause of material patterns.

The ecosystem model comes as a shock to the human system, which prefers universalist (centralized/standardized) or individualist (random) orders, but points to a way that humans can exist in inequality for the greater good of all.
